Experimental Design and Statistical Power for Cluster Randomized Cost-Effectiveness Trials

Cluster randomized trials (CRTs) are commonly used to evaluate the effectiveness of educational interventions. Recently, there has been greater emphasis on using these trials to examine cost-effectiveness as well. However, methods for determining the statistical power of cluster randomized cost-effectiveness trials (CRCETs) are limited. This study developed power computation formulas and statistical software to help researchers design two- and three-level CRCETs.

Why are cost-effectiveness analysis and statistical power for CRCETs important?

Policymakers and administrators commonly strive to identify interventions that achieve maximal effectiveness for a given budget, or to achieve a target improvement in effectiveness at the lowest possible cost (Levin et al., 2017). Evaluations that lack a credible cost analysis can lead to misleading judgments about the relative benefits of alternative strategies for achieving a particular goal. CRCETs link the cost of implementing an intervention to its effect and thus help researchers and policymakers judge the degree to which an intervention is cost-effective. Statistical power analysis is a key consideration when designing CRCETs: it allows researchers to determine the conditions (e.g., the number of clusters and the number of individuals per cluster) needed to ensure a high probability (e.g., power ≥ 0.80) of correctly detecting whether an intervention is cost-effective.

How can researchers compute statistical power when designing CRCETs?

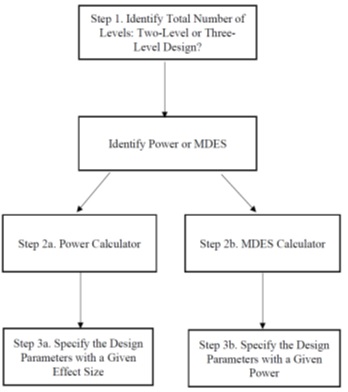

This study developed methods, based on multilevel models, for computing power and the minimum detectable effect size (MDES). We also developed a user-friendly tool (PowerUp!-CEA; https://www.causalevaluation.org/) for planning CRCETs. Applied researchers can follow a three-step procedure to use the tool: (1) specify the number of levels of clustering in the study; (2) specify whether the goal is to determine the statistical power given a specified effect size and sample size, or the MDES given a particular sample size and a specified level of statistical power; and (3) specify the values of the design parameters for the effectiveness and cost data (e.g., intraclass correlation coefficients and the correlations between effectiveness and cost data) and the statistical significance level. Once these parameters have been specified, the tool automatically computes the statistical power or the MDES.

Figure 1. A three-step process to compute power or MDES for CRCETs using PowerUp!-CEA
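The kind of calculation the tool automates in step (3) can be illustrated with a small sketch. The Python below is not PowerUp!-CEA's code and may not match the paper's parameterization. It assumes that effectiveness and cost are combined into an incremental net monetary benefit (INMB = λΔE − ΔC, where λ is a hypothetical willingness-to-pay value), a two-level design with no covariates, separate cluster-level and individual-level correlations between effectiveness and cost, and a large-sample normal approximation in place of the t distribution (typically with J − 2 degrees of freedom) that PowerUp!-style formulas use. The names `TwoLevelCRCET` and `min_clusters`, and all default values, are hypothetical.

```python
from dataclasses import dataclass, replace
from math import sqrt
from statistics import NormalDist

_Z = NormalDist()  # standard normal; a normal approximation, not the t distribution


@dataclass
class TwoLevelCRCET:
    """Hypothetical design parameters for a two-level CRCET (J clusters, n persons each)."""
    J: int                  # total number of clusters randomized
    n: int                  # individuals per cluster
    P: float = 0.5          # proportion of clusters assigned to treatment
    sd_e: float = 1.0       # total SD of the effectiveness outcome
    sd_c: float = 1.0       # total SD of per-person cost
    icc_e: float = 0.2      # intraclass correlation of effectiveness
    icc_c: float = 0.2      # intraclass correlation of cost
    r_between: float = 0.0  # cluster-level correlation of effectiveness and cost
    r_within: float = 0.0   # individual-level correlation of effectiveness and cost
    wtp: float = 1.0        # assumed willingness to pay (lambda) per unit of effect

    def se_inmb(self) -> float:
        """Standard error of the estimated INMB (treatment minus control)."""
        lam = self.wtp
        # Between-cluster variance of NMB = lam*E - C:
        # lam^2 Var(E) + Var(C) - 2 lam Cov(E, C), at the cluster level.
        tau_e = self.icc_e * self.sd_e ** 2
        tau_c = self.icc_c * self.sd_c ** 2
        v_between = lam ** 2 * tau_e + tau_c - 2 * lam * self.r_between * sqrt(tau_e * tau_c)
        # Within-cluster variance of NMB, same decomposition at the individual level.
        w_e = (1 - self.icc_e) * self.sd_e ** 2
        w_c = (1 - self.icc_c) * self.sd_c ** 2
        v_within = lam ** 2 * w_e + w_c - 2 * lam * self.r_within * sqrt(w_e * w_c)
        # Variance of a cluster mean, then of a difference between arm means.
        var = (v_between + v_within / self.n) / (self.P * (1 - self.P) * self.J)
        return sqrt(var)

    def power(self, delta_e: float, delta_c: float, alpha: float = 0.05) -> float:
        """Two-sided power to detect INMB = wtp * delta_e - delta_c."""
        ncp = abs(self.wtp * delta_e - delta_c) / self.se_inmb()
        z_crit = _Z.inv_cdf(1 - alpha / 2)
        return _Z.cdf(ncp - z_crit) + _Z.cdf(-ncp - z_crit)

    def mdes_inmb(self, power: float = 0.80, alpha: float = 0.05) -> float:
        """Minimum detectable INMB, in the units of wtp * E - C."""
        multiplier = _Z.inv_cdf(1 - alpha / 2) + _Z.inv_cdf(power)
        return multiplier * self.se_inmb()


def min_clusters(design: TwoLevelCRCET, delta_e: float, delta_c: float,
                 target: float = 0.80, alpha: float = 0.05, max_j: int = 10_000):
    """Smallest total J reaching the target power, or None if none up to max_j."""
    for j in range(4, max_j + 1):
        if replace(design, J=j).power(delta_e, delta_c, alpha) >= target:
            return j
    return None


if __name__ == "__main__":
    design = TwoLevelCRCET(J=40, n=20)
    print("SE of INMB:", round(design.se_inmb(), 4))
    print("MDES (INMB scale):", round(design.mdes_inmb(), 4))
    print("Clusters for 0.80 power at delta_e=0.5:", min_clusters(design, 0.5, 0.0))
```

Because the normal approximation ignores the loss of degrees of freedom, it slightly overstates power when the number of clusters is small. A three-level version would add a variance component (and an effectiveness-cost correlation) for the middle level.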

Full Article Citation:

Li, W., Dong, N., Maynard, R., Spybrook, J., & Kelcey, B. (in press). Experimental design and statistical power for cluster randomized cost-effectiveness trials. Journal of Research on Educational Effectiveness. https://www.tandfonline.com/doi/full/10.1080/19345747.2022.2142177